Hierarchical Reinforcement Learning for Robustness, Performance and Explainability

This is my MSc thesis at the University of Edinburgh [1][2]. Here is a short video:

In general, a Hierarchical Reinforcement Learning (HRL) model is a group of RL models organised in a hierarchy. Each model specialises in certain actions, and one model, usually called the “controller”, takes care of solving the main task. Given this organisation, every model at a higher level uses some of the policies (or models) at lower levels to accomplish its specialised task. There are many types of HRL models: some specialise in learning the policies, others in learning the hierarchy, and so on (for a more in-depth description of HRL, see this interesting article from The Gradient). Each one uses different concepts to solve the problem. Here, I focus on a pre-trained, pre-specified hierarchy to test different characteristics of such models.

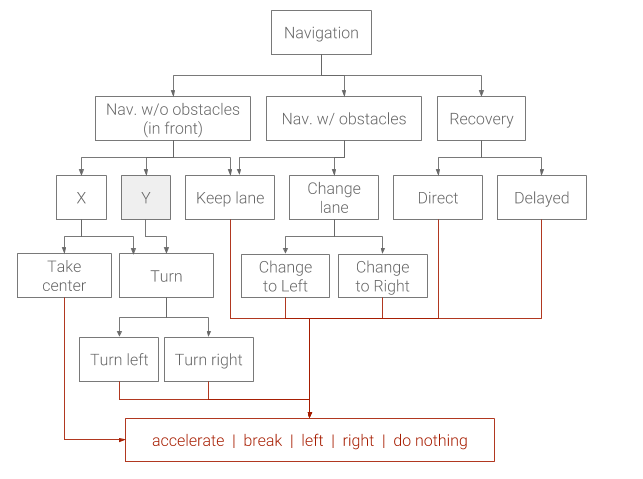

Take, for example, the hierarchy in figure 1, which could be used to drive a car in a simplified environment. Here the policy “Avoid Obstacle” can make use of the sub-policies “Change Lane” or “Stay in Lane” to accomplish its goal. In the end, every policy uses either a sub-policy or the raw actions of the agent directly: in this case, accelerate, brake, turn left, turn right or do nothing.
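To make the car example above more concrete, here is a minimal Python sketch of such a hierarchy. It is only an illustration, not the code from the thesis: the `Policy` class, the `obstacle_ahead` observation key and the hand-written selection rules are all assumptions made for this example (in practice each selector would be a trained RL model). Each policy picks one of its options, which is either a sub-policy or a raw action, and the call also returns the chain of policies that led to the action.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple, Union

# Raw actions of the agent, as listed in the text.
RAW_ACTIONS = ["accelerate", "brake", "turn_left", "turn_right", "do_nothing"]


@dataclass
class Policy:
    """Hypothetical policy node: chooses among sub-policies or raw actions."""
    name: str
    options: List[Union["Policy", str]]
    # Stand-in for a trained model: maps an observation to an option index.
    select: Callable[[dict], int]

    def act(self, obs: dict) -> Tuple[str, List[str]]:
        choice = self.options[self.select(obs)]
        if isinstance(choice, Policy):
            # Delegate to the chosen sub-policy and record the path taken.
            action, path = choice.act(obs)
            return action, [self.name] + path
        # The choice is a raw action.
        return choice, [self.name]


# Leaf policies act directly on raw actions (selection rules are assumed).
stay_in_lane = Policy("Stay in Lane", RAW_ACTIONS,
                      lambda obs: RAW_ACTIONS.index("accelerate"))
change_lane = Policy("Change Lane", RAW_ACTIONS,
                     lambda obs: RAW_ACTIONS.index("turn_left"))

# Higher-level policy that chooses between the two sub-policies.
avoid_obstacle = Policy("Avoid Obstacle", [change_lane, stay_in_lane],
                        lambda obs: 0 if obs["obstacle_ahead"] else 1)

action, path = avoid_obstacle.act({"obstacle_ahead": True})
print(action, path)
```

The returned path is one simple way to see which policies were responsible for a given raw action.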

There are several benefits to using HRL. We explore the benefits regarding Robustness, Explainability and Performance. Many of them come from the modularity of using a hierarchy of policies. For example, if the environment changes, the whole hierarchy does not have to change as well, only the affected part, which can significantly reduce training time depending on the complexity. Another example concerns performance: suppose we find a policy that does not behave as expected in a specific case. The solution is to modify only that policy (e.g. with more training), while all the other policies in the hierarchy stay the same.
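The modularity argument can be sketched in a few lines of Python. This is a toy illustration under assumptions, not the project's code: the `Hierarchy` class, the policy names and the `left_blocked` observation key are hypothetical, and "more training" is simulated by swapping in a hand-written replacement function.

```python
from typing import Callable, Dict

# A policy is modelled here as a plain function from observation to raw action.
Policy = Callable[[dict], str]


def stay_in_lane(obs: dict) -> str:
    return "accelerate"


def change_lane_v1(obs: dict) -> str:
    # Misbehaves in one specific case: turns left even if the left lane is blocked.
    return "turn_left"


def change_lane_v2(obs: dict) -> str:
    # Stand-in for the same policy after additional training.
    return "turn_right" if obs.get("left_blocked") else "turn_left"


class Hierarchy:
    """Hypothetical two-level hierarchy with replaceable sub-policies."""

    def __init__(self, sub_policies: Dict[str, Policy]):
        self.sub_policies = dict(sub_policies)

    def controller(self, obs: dict) -> str:
        # Fixed top-level rule; it is not touched when a sub-policy is replaced.
        name = "change_lane" if obs.get("obstacle_ahead") else "stay_in_lane"
        return self.sub_policies[name](obs)

    def replace(self, name: str, policy: Policy) -> None:
        if name not in self.sub_policies:
            raise KeyError(name)
        self.sub_policies[name] = policy


hierarchy = Hierarchy({"stay_in_lane": stay_in_lane,
                       "change_lane": change_lane_v1})
hard_case = {"obstacle_ahead": True, "left_blocked": True}

before = hierarchy.controller(hard_case)
hierarchy.replace("change_lane", change_lane_v2)  # only this policy changes
after = hierarchy.controller(hard_case)
print(before, after)
```

Only the `change_lane` entry is swapped; the controller and `stay_in_lane` are the same objects before and after the fix.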

You can run the model above in a few steps from the project repository. I invite you to visit it: https://github.com/NotAnyMike/HRL

[1] I am currently working on this project and will keep updating this post based on the progress of the thesis.

[2] The cover picture is taken from the repo of the project.